The proliferation of “ReAct” (Reasoning and Acting) as a cornerstone of AI agents is undeniable. While there’s no shortage of AI tutorials on the internet, the signal-to-noise ratio in the AI hype-cycle is significant. So I set out to understand ReAct from first principles and build a ReAct agent from its foundational components - no frameworks, no platforms, no products, just standard Python libraries. I was pleasantly surprised by how simple it actually is and Tiny ReAct is the result of that effort.

The Agent Structure

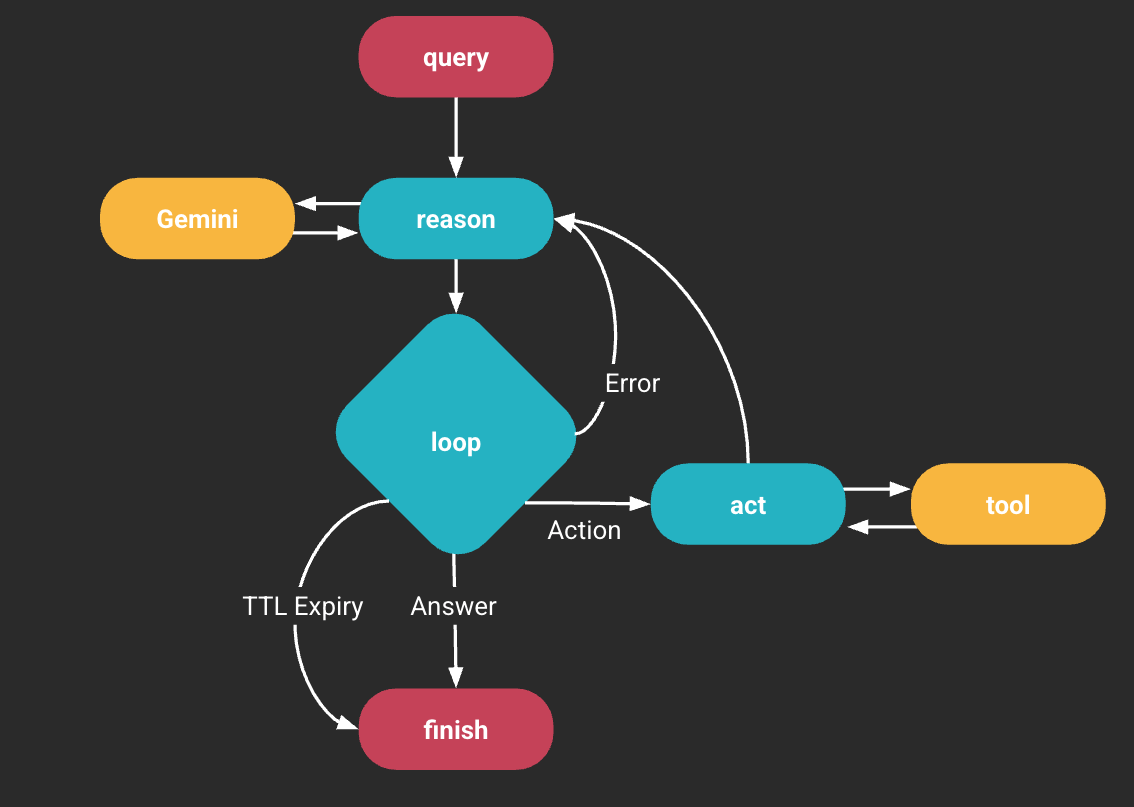

A ReAct loop is a few simple components:

- A query that starts the ReAct loop

- A reasoning step that queries and LLM for the action to take

- An acting step where that action translates to a tool/function call that pulls in external data into the ReAct loop

- Finally this iterates in a loop until the agent identifies an answer or reaches its TTL

Here’s the skeleton of our Tiny ReAct agent, showing just the method signatures to give you an overview of how it works:

class TinyReAct:

def __init__(self, ttl=5, tools=None):

# Initialize the agent with tools and settings

...

def query(self, query: str) -> str:

# Handle the main query loop

...

def iterate(self) -> tuple[str, bool]:

# Process one iteration of reasoning and acting

...

def reason(self) -> str:

# Get the model's reasoning

...

def act(self, function_call: str) -> str:

# Execute a tool call

...

That’s it! No fancy frameworks, no complex architecture - just a simple class that follows the ReAct pattern: Reason → Act → Observe → Repeat.

The Methods in Detail

1. Initialization

The __init__ method is where we set up our agent. It takes two parameters:

ttl(time-to-live): A safety mechanism to prevent infinite loopstools: A list of functions the agent can use to interact with the world

The method initializes the conversation history, sets up the Gemini client for reasoning, and creates the instruction prompt by dynamically incorporating the available tools. This prompt will guide the agent’s behavior throughout its operation.

def __init__(self, ttl=5, tools=None):

self.messages = []

self.iteration = 0

self.ttl = ttl

self.tools = tools

# Initialize Gemini client

load_dotenv()

api_key = os.getenv("GEMINI_API_KEY")

self.client = genai.Client(api_key=api_key)

# Format the instruction prompt with available tools

tool_prompt = ""

for function_name in self.tools:

tool_prompt += f"\n\n{function_name.__name__}{inspect.signature(function_name)}{function_name.__doc__}"

self.instruction_prompt = instruction_prompt_template.format(tool_prompt=tool_prompt)

2. The Query Loop

The query method is the main entry point for our agent. It takes a user’s question and starts the reasoning loop. This method:

- Initializes the conversation with the instruction prompt and the user’s query

- Enters a loop that continues until the agent either:

- Reaches a final answer

- Encounters an error

- Hits the iteration limit

The method uses color coding to make the output more readable, with different colors for the query, thoughts, actions, and observations.

def query(self, query: str) -> str:

self.messages = [{

"role": "user",

"parts": [{"text": self.instruction_prompt + "\n\n#THINKING TRACE\n\nQuery: " + query + "\n"}]

}]

print(f"{TextColor.QUERY}Query: {query}\n{TextColor.RESET}")

while True:

result, continue_loop = self.iterate()

print(f"{result}\n")

if not continue_loop:

break

return self.message_history()

3. The Iteration Logic

The iterate method is where the magic happens! It processes one complete cycle of the ReAct pattern. For each iteration, it:

- Checks if we’ve hit the iteration limit

- Gets the model’s reasoning about what to do next

- Either:

- Executes an action if the model wants to do something

- Returns the final answer if the model has one

- Handles any errors that occur

The method uses color coding to make the output more readable and maintains the conversation history for context.

def iterate(self) -> tuple[str, bool]:

if self.iteration >= self.ttl:

return f"{TextColor.TERMINATE}Terminate: Reached maximum number of iterations{TextColor.RESET}", False

reasoning = self.reason()

if not reasoning:

return "Error: No response from model", False

elif "Answer" in reasoning:

return f"{TextColor.ANSWER}{reasoning}{TextColor.RESET}", False

elif "Action" in reasoning:

self.iteration += 1

action_line = [line.strip() for line in reasoning.split('\n') if line.startswith("Action")][0]

function_call = action_line.split(":")[1].strip()

observation = self.act(function_call)

return f"{TextColor.MODEL}{reasoning}{TextColor.RESET}\n{TextColor.OBSERVATION}{observation}{TextColor.RESET}", True

else:

return "Error: Answer or Action not found in model response", True

4. Reasoning and Acting

The reason and act methods handle the core functionality of our agent:

The reason method:

- Calls the Gemini model to get its reasoning about what to do next

- Adds the model’s response to the conversation history

- Handles any errors that might occur during the API call

The act method:

- Extracts the function name from the model’s action request

- Validates that the function exists in our tools list

- Executes the function and captures its result

- Adds the observation to the conversation history

def reason(self) -> str:

try:

response = self.client.models.generate_content(

contents=self.messages,

model="gemini-2.5-flash-preview-05-20"

)

self.messages.append({

"role": "model",

"parts": [{"text": response.text}]

})

return response.text

except Exception as e:

return f"Error calling Gemini API: {str(e)}"

def act(self, function_call: str) -> str:

function_name = function_call.split('(')[0].strip()

if function_name not in [func.__name__ for func in self.tools]:

return f"\nError: Function '{function_name}' is not in the list of available tools"

try:

result = eval(f"{function_call}")

observation = f"Observation{self.iteration}: {result}"

except Exception as e:

observation = f"\nError: Cannot execute function {function_call}: {str(e)}"

self.messages.append({

"role": "user",

"parts": [{"text": observation}]

})

return observation

The Instruction Prompt

The instruction prompt is like the agent’s personality and rulebook rolled into one. It tells the agent:

- How to structure its thinking (using the ReAct pattern)

- What tools it has available

- What rules it must follow

- How to format its responses

The prompt is crucial because it guides the model’s behavior and ensures it follows the ReAct pattern correctly. We dynamically insert the available tools into the prompt, so the agent knows exactly what it can do.

instruction_prompt_template = """

Use the following thinking trace pattern and tools to solve the problem in a number of steps.

# TOOLS

{tool_prompt}

# EXAMPLE THINKING TRACE

## INPUT

Query: What is 10 * 3 + 10?

Thought1: First, I need to multiply 10 by 3.

Action1: multiply_numbers(10, 3)

Observation1: 30

## OUTPUT

Thought2: Now I need to add 10 to this result.

Action2: add_numbers(30, 10)

# RULES

1. Do not make up observations. Observations should only be provided as input, never as output.

2. One action per turn. Do not make multiple tool calls or skip steps. Each response should only contain a single Thought, Action, or Answer.

3. Think out loud and feel free to be expressive and explain your thought process in each Thought. Each Thought should be a minimum of 3 sentences.

4. Denote the iteration of each step (as-in Thought1, Action1)

5. When you have the final answer, and ONLY then, use the format:

Answer: [your final answer]

6. When you have the final answer, rephrase the answer in the context of the original query, and briefly summarize the work you did, listing them as numbered steps.

7. If you cannot get the correct information from a tool, try different variations of the query. After 5 attempts, return an error.

8. Stick to the format as shown.

"""

This prompt template is designed to:

- Show a clear example of how to structure the thinking process

- Provide explicit rules about what the agent can and cannot do

- Ensure the agent explains its reasoning thoroughly

- Maintain a consistent format throughout the conversation

- Handle errors gracefully

- Provide clear, well-structured final answers

The {tool_prompt} placeholder is replaced with the actual tools available to the agent, including their function signatures and documentation. This helps the agent understand exactly what tools it has at its disposal and how to use them correctly.

Example Output

Let’s see our agent in action with a more complex problem that requires multiple steps and different types of tools. Notice how it:

- Breaks down the problem into logical steps

- Handles errors gracefully by trying alternative approaches

- Combines information from different sources

- Performs necessary calculations

- Provides a clear final answer with a summary

Query: What is the current tempurature (in fahrenheit) in the city that won the superbowl in 1995?

Thought1: The first step is to identify which city won the Super Bowl in 1995. I will use Wikipedia to search for "Super Bowl 1995 winner" to get this information. This will help me narrow down the location for which I need to find the temperature.

Action1: wikipedia_summary("Super Bowl 1995 winner", 2)

Observation1: Wikipedia page for 'Super Bowl 1995 winner' not found.

Thought2: My previous search for "Super Bowl 1995 winner" failed, indicating that the specific phrasing might not match a Wikipedia page title. I need to find the winner of the 1995 Super Bowl, which is officially Super Bowl XXIX. I will try searching for "Super Bowl XXIX" to find the relevant information about the winning team and, consequently, their city.

Action2: wikipedia_summary("Super Bowl XXIX", 2)

Observation2: Super Bowl XXIX was an American football game between the American Football Conference (AFC) champion San Diego Chargers and the National Football Conference (NFC) champion San Francisco 49ers to decide the National Football League (NFL) champion for the 1994 season. The 49ers defeated the Chargers by a score of 49–26, the most points ever earned in a Super Bowl game, becoming the first team to win five Super Bowl championships.

Thought3: I have successfully identified the winning team of Super Bowl XXIX as the San Francisco 49ers. Now, I need to find the current temperature in San Francisco. To do this, I first need to get the geographical coordinates (latitude and longitude) of San Francisco. I will use the `wikipedia_coordinates` tool with "San Francisco" as the page title to obtain these coordinates.

Action3: wikipedia_coordinates("San Francisco")

Observation3: (Decimal('37.78333332999999782941813464276492595672607421875'), Decimal('-122.4166666699999979073254507966339588165283203125'))

Thought4: I have successfully retrieved the coordinates for San Francisco: latitude 37.78333333 and longitude -122.41666667. Now that I have the coordinates, I can use the `get_temperature` tool to find the current temperature in San Francisco. The question asks for the temperature in Fahrenheit, but the tool provides the temperature without a unit, so I will assume it's in the standard unit for the tool, which is usually Celsius or Fahrenheit depending on the backend. I will provide the coordinates to the tool.

Action4: get_temperature(37.78333333, -122.41666667)

Observation4: The temperature at 37.78333333, -122.41666667 is 10.290999412536621°C

Thought5: I have obtained the temperature in Celsius, which is 10.290999412536621°C. The original query asked for the temperature in Fahrenheit. Therefore, I need to convert this Celsius temperature to Fahrenheit using the formula F = C * 9/5 + 32. I will first multiply 10.290999412536621 by 9/5 and then add 32 to the result.

Action5: multiply_numbers(10.290999412536621, 1.8)

Observation5: 18.523798942565918

Thought6: I have calculated the first part of the Fahrenheit conversion, which is 10.290999412536621 * 1.8 = 18.523798942565918. The final step in converting Celsius to Fahrenheit is to add 32 to this value. I will now use the `add_numbers` tool to complete this calculation and get the final temperature in Fahrenheit.

Action6: add_numbers(18.523798942565918, 32)

Observation6: 50.52379894256592

Answer: The current temperature in the city that won the Super Bowl in 1995 is approximately 50.52 degrees Fahrenheit.

To summarize the steps:

1. Identified that the San Francisco 49ers won Super Bowl XXIX (1995).

2. Obtained the geographical coordinates for San Francisco.

3. Retrieved the temperature for San Francisco in Celsius.

4. Converted the temperature from Celsius to Fahrenheit.

Lessons Learned

Simplicity is Key: ReAct is surprisingly simple to implement. The core pattern is just a loop of reasoning and acting. You don’t need complex frameworks or architectures to get started.

Prompt Engineering Matters: The instruction prompt is crucial. A well-crafted prompt can make the difference between a confused agent and one that solves problems effectively. Take time to refine your prompt and test it with different types of problems.

Error Handling is Essential: Things will go wrong - the model might give unexpected responses, tools might fail, etc. Good error handling keeps the agent running smoothly and provides helpful feedback when something goes wrong.

Color Coding Helps: Adding color to different types of messages (thoughts, actions, observations) makes the output much more readable. It helps you understand what the agent is doing at a glance.

Tools are Powerful: The agent is only as good as its tools. Adding more tools (like Wikipedia search, weather API, etc.) makes it more capable. Think carefully about what tools your agent needs to solve its intended problems.

The full code is available on GitHub. Feel free to play with it, modify it, and make it your own. After all, the best way to understand something is to build it yourself!

Happy coding! 🚀