You can’t watch a keynote very long these days without hearing the word “agent”. Agent is so popular that the word has now actually lost its technical meaning and has been donated to the marketing team. But I’ll still give my most concise, technical definition - an agent is an application with the ability to make decisions, using AI, that influence the application’s control-flow.

Even more simply: agents have agency.

I started my own agent learning journey by building one myself. I quickly found that there is so much abstraction in all the chaining and agent frameworks, that it’s hard to understand what’s happening. So I decided build one from scratch - no frameworks, no products, just standard Python libraries (and a little help from Gemini). Tiny ReAct is the result of this effort, all in less than 100 lines of code!

The core concepts of how an agent works are actually quite simple. “ReAct” (Reasoning and Acting) was one of the first LLM-based agentic approaches that became popularized. I used the ReAct pattern and a set of simple tools (an “AI” term for Python function) that can make API calls for external information. The ultimate goal was an agent that could answer complex questions that require some external information, multiple steps, and a bit of decision-making to answer.

Let’s talk about the architecture of this ReAct agent …

🤖 Anatomy of an Agent

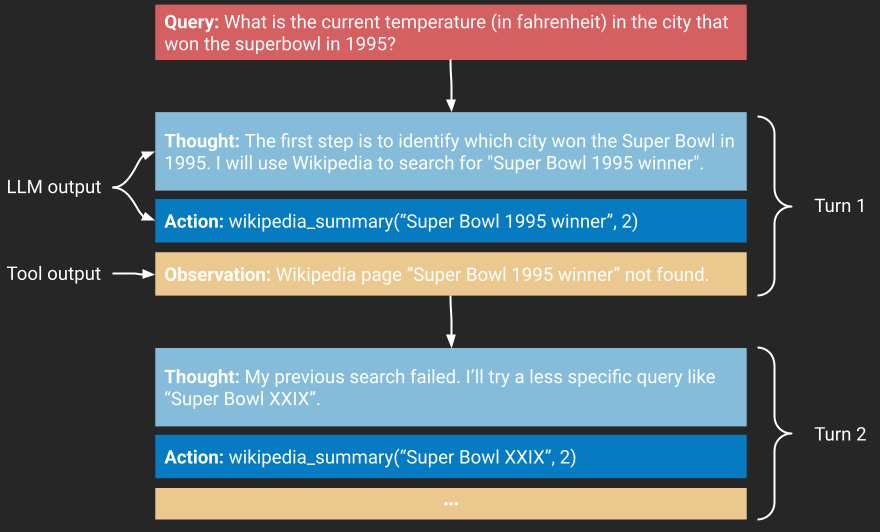

The simplest version of an agent is just an LLM that is called in a loop. Each loop (or “turn”) gets the full conversation history of the previous turns. This allows the LLM to make incremental progress towards a complex question, taking one step (or tool call) at a time. This full conversation history, including the initial instruction prompt, query, and every request/response pair, is called a reasoning trace.

The reasoning trace has three parts to it:

- Thought (reasoning): A dialogue generated by the LLM where it’s instructed to describe what it thinks it should do next to answer the query.

- Action: A tool/function call generated by the LLM, which is informed by its own reasoning dialogue.

- Observation: The output of that tool/function call. This part is not generated by the LLM. Rather, it’s injected into the reasoning trace and the LLM will see this output on the next turn.

A ReAct reasoning trace might look like the following flow. A triplet of thought, action, and observation is appended with each turn, until an answer is found.

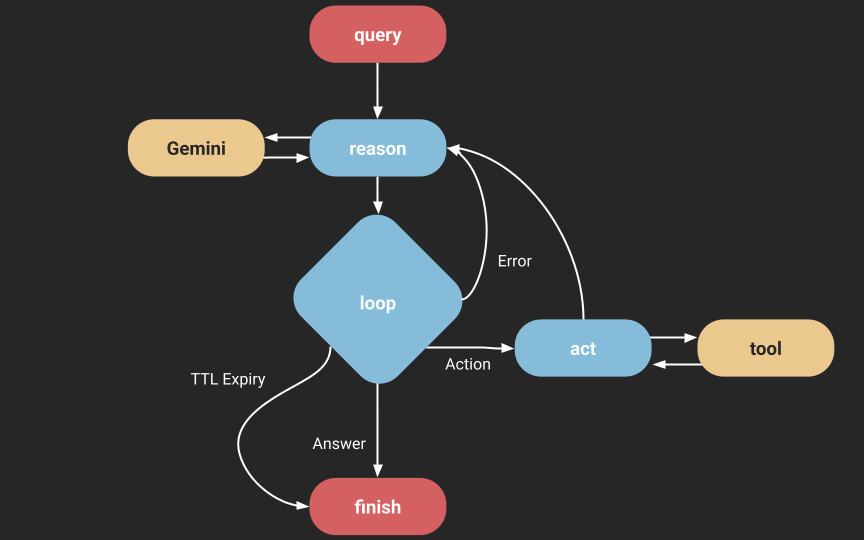

Now imagine on the next turn you are calling the LLM again and passing this conversation history in. The LLM will see the tool response in the most recent observation and decide what to do next. This loop ends up looking like the following diagram.

On each turn the agent has four possible outcomes:

- The LLM has identified the answer and the loop stops

- The LLM hits its expiry and the loop stops before getting an answer

- The LLM decides that it needs to make another tool call

- Or there was an error and if the LLM is smart enough, it may be able to recover from that error

Here’s the skeleton of our Tiny ReAct agent class. Its methods correspond with the ReAct loop: Reason → Act → Observe → Repeat.

class TinyReAct:

def __init__(self, ttl=5, tools=None):

# Initialize the agent with tools and settings

...

def query(self, query: str) -> str:

# Handle the main query loop

...

def iterate(self) -> tuple[str, bool]:

# Process one iteration of reasoning and acting

...

def reason(self) -> str:

# Genereate the LLM's reasoning and action

...

def act(self, function_call: str) -> str:

# Execute a tool call

...

🔧 The Agent in Detail

🚀 Agent Initialization

The __init__ method is where we set up our agent. There are three important things that happen here:

-

The

tracelist is initialized. This list will store our conversation history / reasoning trace. LLMs suffer from amnesia - they have no memory of previous calls. So we record each request & response outside of the LLM and pass it in to every LLM call. -

We initialize our LLM. In this case I’m using Google’s

gemini-2.5-flash-preview-05-20that will provide all the reasoning and acting outputs. -

We assemble our

instruction_prompt. The instruction prompt tells the LLM what to do and it also lists out the tools that are available to it. The LLM only knows what actions it can take because we tell it in theinstruction_prompt.

def __init__(self, ttl=5, tools=None):

self.trace = []

self.iteration = 0

self.ttl = ttl

self.tools = tools

# Initialize Gemini client

load_dotenv()

api_key = os.getenv("GEMINI_API_KEY")

self.client = genai.Client(api_key=api_key)

# Format the instruction prompt with available tools

tool_prompt = ""

for function_name in self.tools:

tool_prompt += f"\n\n{function_name.__name__}{inspect.signature(function_name)}{function_name.__doc__}"

self.instruction_prompt = instruction_prompt_template.format(tool_prompt=tool_prompt)

📖 Instruction Prompt

The instruction prompt is how we guide the LLM. It’s inserted as the first part of the reasoning trace so the LLM sees these instructions on every call. It has four parts:

- Instruction - The overall task description

- Tools - The list of tools that are available to the LLM. This is how the LLM knows what actions it can take. Each tool description is effectively a function signature along with descriptions of what the tool does and its input parameters. These descriptions inform the LLM which tool it should use for any given task.

- Examples - These are few-shot examples that provide more detail for how we want the generated output to be structured

- Rules - More specific directions on how it should process its input

# INSTRUCTION

Use the following thinking trace pattern and tools to solve the problem

in a number of steps.

Use the ReAct pattern of thinking, acting, and observing.

# TOOLS

wikipedia_summary(query: str, sentences: int = 10) -> str

Searches Wikipedia for a given query and returns a summary. Returns up to 10 sentences max.

parameter query: the search term or question for Wikipedia.

parameter sentences: the number of sentences to try and retrieve for the summary.

returns: a string containing the summary of the Wikipedia page, or an error message

if the page is not found or an error occurs.

example: wikipedia_summary("United States president", 5)

add_numbers(x: int, y: int) -> int

Adds two numbers.

parameter x: the first number to add.

parameter y: the second number to add.

returns: the sum of the two numbers.

example: add_numbers(4, 7)

... more tools ...

# EXAMPLE REASONING TRACE

Query: What is 10 * 3 + 10?

Thought1: First, I need to multiply 10 by 3.

Action1: multiply_numbers(10, 3)

Observation1: 30

# RULES

1. One action per turn. Do not make multiple tool calls or skip steps.

Each response should only contain a single Thought, Action, or Answer.

2. Think out loud and feel free to be expressive and explain your thought

process in each Thought.

3. Denote the iteration of each step (as-in Thought1, Action1)

4. When you have the final answer, and ONLY then, use the format:

Answer: [your final answer]

... more rules ...

"""

🎯 The Query Entrypoint

The query method is the entry point for our agent.

It is taking the user’s query along with the instruction prompt and it constructs the prompt for the very 1st LLM call. This is what kickstarts the loop.

It also contains our main agent loop, which will stop whenever continue_loop != True.

def query(self, query: str) -> str:

self.trace = [{

"role": "user",

"parts": [{"text": self.instruction_prompt + "\n\n#THINKING TRACE\n\nQuery: " + query + "\n"}]

}]

print(f"{TextColor.QUERY}Query: {query}\n{TextColor.RESET}")

while True:

result, continue_loop = self.iterate()

print(f"{result}\n")

if not continue_loop:

break

return self.message_history()

🧠 Reasoning and Acting

reason and act are doing most of the heavy lifting for our agent.

reason is what actually calls our LLM. It simply makes a call to Gemini by sending in the reasoning trace as the prompt. Note that on the very first turn, the prompt will just be the prompt instructions + the user’s query. On every subsequent turn, each new thought + action + observation will be appended so the LLM will see all dialogue, tool calls, and observation outputs from previous turns.

The act method does the following:

- Extracts the tool call from the text generated by the

reasonmethod - Validates that this tool actually exists

- It calls the tool

- Then it takes the tool output and appends it to the reasoning trace starting with the keyword

Observation

def reason(self) -> str:

try:

response = self.client.models.generate_content(

contents=self.trace,

model="gemini-2.5-flash-preview-05-20"

)

self.trace.append({

"role": "model",

"parts": [{"text": response.text}]

})

return response.text

except Exception as e:

return f"Error calling Gemini API: {str(e)}"

def act(self, function_call: str) -> str:

function_name = function_call.split('(')[0].strip()

if function_name not in [func.__name__ for func in self.tools]:

return f"\nError: Function '{function_name}' is not in the list of available tools"

try:

result = eval(f"{function_call}")

observation = f"Observation{self.iteration}: {result}"

except Exception as e:

observation = f"\nError: Cannot execute function {function_call}: {str(e)}"

self.trace.append({

"role": "user",

"parts": [{"text": observation}]

})

return observation

⚙️ Iteration Control-flow

iterate is where the ✨magic✨ happens! Remember our definition of an agent? An application that uses AI-decision making to influence the application’s control-flow.

The instruction_prompt tells the LLM to output specific keywords for each turn - either Action or Answer. These are the only two paths that the LLM is instructed to take. iterate extracts those exact strings, which then dictate which specific if-then branch is executed.

While seemingly magical from the outside, agents boil down to this exact pattern:

- An LLM generates strings with specific keywords

- Code looks for those exact keywords in the string

- Then the code takes different paths within its logic based on those keywords

def iterate(self) -> tuple[str, bool]:

if self.iteration >= self.ttl:

return f"{TextColor.TERMINATE}Terminate: Reached maximum number of iterations{TextColor.RESET}", False

reasoning = self.reason()

if not reasoning:

return "Error: No response from model", False

elif "Answer" in reasoning:

return f"{TextColor.ANSWER}{reasoning}{TextColor.RESET}", False

elif "Action" in reasoning:

self.iteration += 1

action_line = [line.strip() for line in reasoning.split('\n') if line.startswith("Action")][0]

function_call = action_line.split(":")[1].strip()

observation = self.act(function_call)

return f"{TextColor.MODEL}{reasoning}{TextColor.RESET}\n{TextColor.OBSERVATION}{observation}{TextColor.RESET}", True

else:

return "Error: Answer or Action not found in model response", True

🎬 Our Agent in Action

You can run this agent yourself by running agent.py from the Tiny ReAct GitHub repo:

python3 agent.py "What is the current tempurature (in fahrenheit) in the city that won the superbowl in 1995?"

In this case I gave our agent this set of tools:

add_numbers(x: int, y: int)subtract_numbers(x: int, y: int)multiply_numbers(x: int, y: int)divide_numbers(x: float, y: float)get_temperature(latitude: float, longitude: float)search_wikipedia_page(query: str)wikipedia_coordinates(page_title: str)wikipedia_summary(query: str, sentences: int = 10)

I’ve defined a pretty granular set of tools, mostly so I can test the model’s ability to use various tools together. In practice I might want to have more broadly-scoped tools, but tool granularity is a topic for another post …

Below is the full reasoning trace from asking our agent: What is the current tempurature (in fahrenheit) in the city that won the superbowl in 1995?. We can see that it takes six turns before it finds the answer. It breaks down the problem into individual steps using different tool calls to provide the information it needs. Then it gets the right answer - when I ran this it was indeed 50°F in San Francisco (and the 49ers won the Super Bowl in 1995)!

Query: What is the current tempurature (in fahrenheit) in the

city that won the superbowl in 1995?

Thought1: The first step is to identify which city won the Super Bowl in 1995. I will use Wikipedia to search for "Super Bowl 1995 winner" to get this information. This will help me narrow down the location for which I need to find the temperature.

Action1: wikipedia_summary("Super Bowl 1995 winner", 2)

Observation1: Wikipedia page for 'Super Bowl 1995 winner' not found.

Thought2: My previous search for "Super Bowl 1995 winner" failed, indicating that the specific phrasing might not match a Wikipedia page title. I need to find the winner of the 1995 Super Bowl, which is officially Super Bowl XXIX. I will try searching for "Super Bowl XXIX" to find the relevant information about the winning team and, consequently, their city.

Action2: wikipedia_summary("Super Bowl XXIX", 2)

Observation2: Super Bowl XXIX was an American football game between the American Football Conference (AFC) champion San Diego Chargers and the National Football Conference (NFC) champion San Francisco 49ers to decide the National Football League (NFL) champion for the 1994 season. The 49ers defeated the Chargers by a score of 49–26, the most points ever earned in a Super Bowl game, becoming the first team to win five Super Bowl championships.

Thought3: I have successfully identified the winning team of Super Bowl XXIX as the San Francisco 49ers. Now, I need to find the current temperature in San Francisco. To do this, I first need to get the geographical coordinates (latitude and longitude) of San Francisco. I will use the `wikipedia_coordinates` tool with "San Francisco" as the page title to obtain these coordinates.

Action3: wikipedia_coordinates("San Francisco")

Observation3: (Decimal('37.78333332999999782941813464276492595672607421875'), Decimal('-122.4166666699999979073254507966339588165283203125'))

Thought4: I have successfully retrieved the coordinates for San Francisco: latitude 37.78333333 and longitude -122.41666667. Now that I have the coordinates, I can use the `get_temperature` tool to find the current temperature in San Francisco. The question asks for the temperature in Fahrenheit, but the tool provides the temperature without a unit, so I will assume it's in the standard unit for the tool, which is usually Celsius or Fahrenheit depending on the backend. I will provide the coordinates to the tool.

Action4: get_temperature(37.78333333, -122.41666667)

Observation4: The temperature at 37.78333333, -122.41666667 is 10.290999412536621°C

Thought5: I have obtained the temperature in Celsius, which is 10.290999412536621°C. The original query asked for the temperature in Fahrenheit. Therefore, I need to convert this Celsius temperature to Fahrenheit using the formula F = C * 9/5 + 32. I will first multiply 10.290999412536621 by 9/5 and then add 32 to the result.

Action5: multiply_numbers(10.290999412536621, 1.8)

Observation5: 18.523798942565918

Thought6: I have calculated the first part of the Fahrenheit conversion, which is 10.290999412536621 * 1.8 = 18.523798942565918. The final step in converting Celsius to Fahrenheit is to add 32 to this value. I will now use the `add_numbers` tool to complete this calculation and get the final temperature in Fahrenheit.

Action6: add_numbers(18.523798942565918, 32)

Observation6: 50.52379894256592

Answer: The current temperature in the city that won the Super Bowl

in 1995 is approximately 50.52 degrees Fahrenheit.

To summarize the steps:

1. Identified that the San Francisco 49ers won Super Bowl XXIX (1995).

2. Obtained the geographical coordinates for San Francisco.

3. Retrieved the temperature for San Francisco in Celsius.

4. Converted the temperature from Celsius to Fahrenheit.

👀 Observations

That is ReAct in a nutshell! While building Tiny ReAct, I also came away with some interesting observations …

-

Ability to handle failures: I was most impressed by Gemini’s ability to handle failures. In it’s first turn it executed

wikipedia_summary("Super Bowl 1995 winner", 2), but the response didn’t include the winner. On the second turn it generated a more generic query in its action withwikipedia_summary("Super Bowl XXIX", 2). It changed its course of action based on a failure to get the right information in the first turn. This demonstrates the kind of flexibility that code alone could never provide. -

Simplicity of a prompt: The initial prompt instruction guides everything. Simple, clear, and concrete language goes a very long way. Just imagine that you are writing the instructions for a friend that you’re not allowed to communicate with. Would your prompt be clear enough for them to complete the task in exactly the structure and format you want it in? Your friends are not mind readers and neither are LLMs.

-

Emergent intelligence: The agent demonstrates how complex multi-step reasoning can emerge from simple tool calls. What looks like sophisticated problem-solving is actually just the LLM making sequential decisions about which tool to call next. The beauty is that each tool is simple and focused, but when orchestrated together through the agent’s reasoning, they can solve complex problems that require multiple steps and domain knowledge.

Overall I learned a lot by building Tiny ReAct. I hope that you learned something too!

Happy hacking 🚀